If you’re a person of colour, then you know that representation in tech has always been limited, not just in the workforce but in the actual tech itself. Resulting issues in tech are many, with examples being white balance in cameras, heart rate monitors in some early smart watches, and some facial recognition systems not working for those with darker skin tones. Technology intended for use by everyone, but where designs marginalise certain parts of society, or only consider certain groups, are problematic. Although there have been gains in rectifying issues in certain products, in tech, there is still a long way to go.

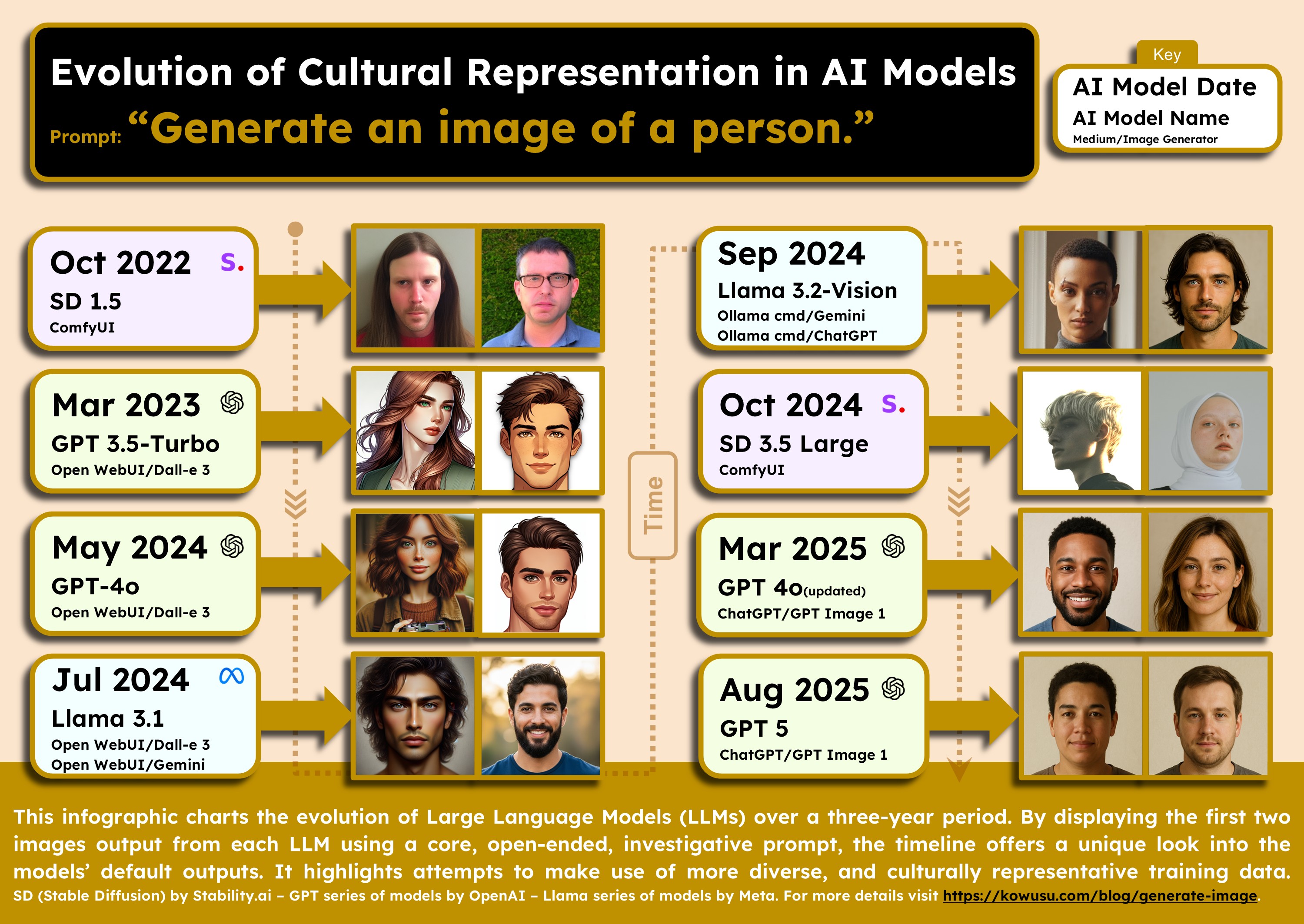

Is generative AI going to replicate these same issues on a large scale, or is this now an opportunity to fix it? I took a bit of a deep dive to find out what is happening, and my conclusion? Things are getting better, but we still have a long way to go! I decided to investigate the training of generative AI models by using a simple prompt ‘Generate an image of a person’. This simple, vague, open ended question allowed me to probe the underlying training of each model I tested. Without a specific instruction, the model itself had to come up with its own default. From that prompt, I took the first two image results from 8 different AI models, with release dates that spanned over the last 3 years. The results of how AI models have progressed are really interesting! Let’s take a look.

The Test Method

-

I chose 8 different generative AI models of varying release dates from, Oct 2022 to Aug 2025 (Stable Diffusion 1.5 and 3.5; GPT-3.5-Turbo, 2 versions of GPT4o, GPT5; Llama 3.1 and 3.2-vision) and asked the same or a similar question to each one (see the infographic for the specific dates).

-

Only one step (text prompt->image generation) was taken for models on AI platforms/user interfaces that had built-in image generation, such as ChatGPT, Gemini or the ComfyUI (locally run UI system for AI image, audio and video generation).

-

Two-steps were required for models that could only generate text outputs, such as anything involving the Ollama AI platform (a way to run models on local computers) or Open WebUI (a user interface that can be used with Ollama).

-

Step 1 was to use the prompt ‘Generate a description of the physical appearance of a person’ to create a text description.

-

Step 2 was to feed the text description from step 1 into an image generation tool such as ChatGPT, Gemini or Dall-E 3 via Open WebUI.

-

Although multiple images/descriptions were generated for each model, only the first two images/descriptions were used. This is because the first few images would be more representative of the more dominant core training data. The more images generated, the higher the probability of drawing from data outliers.

The release date is an important factor. Large Language Models (LLMs) are essentially time capsules. Once a particular model is trained on certain data, the base training process is not changed. So although results may change slightly due to minor tweaks made, the underlying data is still the same. So model gpt-3.5-turbo-0613 from 13th June 2023 has the same training data now that it had when it was released. Any major changes would result in a different model being created with a different name, e.g. gpt-3.5-turbo-0125 a similar model from above, but released on 25th January 2024.

Why now?

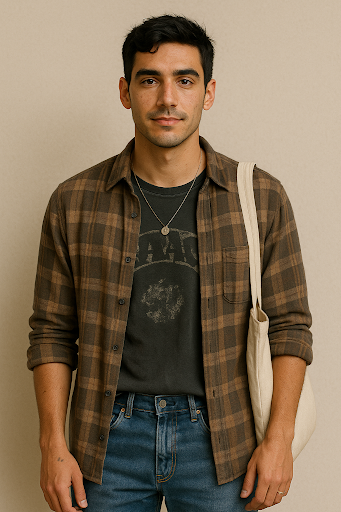

Although this actual test was conducted during the period of about a week or so in October of 2025, I had been probing AI models with this very question for the last 18 months. Through my intermittent testing, in October 2025 I got this image.

This was the first image of an obviously ethnically non-white person I had generated from the simple prompt on the first attempt, in the 18 months since I started trying. So I was quite surprised. I immediately tried GPT4o and got this next image. It was striking because it was the first time any model had produced an image that resembled my own ethnic background, a concrete sign that training data had finally begun to reflect a broader range of human diversity.

It was striking because it was the first time any model had produced an image that resembled my own ethnic background, a concrete sign that training data had finally begun to reflect a broader range of human diversity. But I had to be sure that my previous ChatGPT conversations had not influenced the outcome. I tried a guest account in incognito mode and got a description very similar to that of the first image (no image generation in guest mode). This confirmed that the model's outputs were not influenced by my personal conversation history or profile data. Interestingly, the description was intentionally vague, not mentioning gender or specific ethnicity. This seemed to be a sign of bias mitigation, recent deliberate algorithmic changes within models attempting to avoid defaults by being hyper-vague. Before this, the output images had been quite homogenous, time and time again, signifying a limited training data set. These recent images were a sign to me that the collection of readily available models on the market now had enough variance in their training data and outputs to find interesting results and to see some sort of progression.

Thoughts on the results

Caveat: I am using the visual diversity in the images generated as an indicator of cultural diversity in the training data and how that data is interpreted. I cannot be 100% certain that this is an accurate assertion. However the nature of LLMs as described in these articles, points towards visual representation being the most immediate and observable indicator of representational breadth in training data, as models directly reflect the distribution and interpretation of the data they were trained on.

It is quite clear that up to May 2024, there was little diversity within the training sets. Llama 3.1 in July 2024 gave the first glimpse of some diversity, with the images looking less Western European and more Middle Eastern/Mediterranean. September 2024 was the first time a person of colour was explicitly generated. However, the exact visual representation of the woman that we see is largely an interpretation of the image generation tool, and not entirely due to the description from the Llama 3.2-vision model. The text description was generated first via Ollama using the command prompt on my computer. This was then passed to Gemini which seems to have added some artistic flair to the description. The specific description was

‘‘The skin tone is a smooth, even medium brown”

and could just as easily have been of a person from the Middle East, South Asia or South East Asia. There was also no description whatsoever of gender, which was another addition by Gemini when the image was generated. It is clear that each stage of the image generation process in the two-step process (1. generation of description and 2. interpretation of that description) has a separate effect - the resulting image is not just a result of the text description. Looking deeper into the method employed by the one step platforms such as Gemini, ChatGPT and ComfyUI will show a similar influence as each has a description generating step to answer the prompt and a separate image generator to interpret the description.

Stable Diffusion 3.5 released in October 2024 was very interesting. Although both images generated were of Western individuals, both were very different from the previous images produced from the first 3 models. The first was of what seemed like a Scandinavian or Eastern European person, and the second was of a woman wearing a head scarf, possibly Muslim or Christian. This is the first time I had generated an image of a person wearing some sort of cultural or religious attire from my vague prompt, showing an increased awareness of these concepts within the model. The final two models generated the images that I have already discussed and represented a further increase in diversity of training data set.

Part 2 - Local Model testing

Even though there has been significant change in the cultural and ethnic representation of the output images, it is still not fully representative of all populations. There was no visible representation of South American or Asian cultures, for example. My next thought was to do a test on models that were designed for Non-Western audiences to see how they would do with this test. I focused on the following 3 models: Falcon3 from the UAE, Llama 3.1 Swallow from Japan, and Qwen3 from China. All of these were run via Ollama so image generation was a 2 step process. Due to the vagueness of some of the outputs, the prompts for these were longer, as I asked the models to describe specific features, such as skin colour and hair colour. However, all prompts were just as non-directional as the prompts for part 1. I initially probed each of the 3 in English to try and generate a description of an image. The descriptions were then put into either ChatGPT or Gemini for image generation. All resulting descriptions of physical characteristics and corresponding images were of people with Western features as seen below.

|

|

|

| Chinese Model (Qwen3) | UAE Model (Falcon3) | Japanese Model (Swallow) |

I then decided to try and probe the models by talking in their native languages (using translation). Talking in Mandarin to Qwen generated a description of another Western person. Talking in Arabic to Falcon was extremely difficult. This is because the responses were very poetic and refrained from any form of detailed description of physical characteristics wherever possible, even when prompting was specific and instructional. The best I could get was a description of another person with Western features. The Japanese model, however, was very receptive to receiving Japanese language instructions and was the only one that generated a description of a Japanese person.

|

|

|

| Chinese Model (Qwen3) | UAE Model (Falcon3) | Japanese Model (Swallow) |

This was quite an interesting outcome. I initially thought that the LLMs that were used by the different nations were versions of existing models created by Western companies, most likely Meta's Llama, which retained a considerable amount of initial training data. However, looking deeper into the origins of the models highlights that the Japanese model was the only one that still had a significant amount of influence from the Llama 3.1 model. Although early versions of Qwen were based on Llama models, both the Falcon3 and Qwen3 models had no influence and were built from the ground up. However, because all models relied heavily on datasets based on internet contents , and English language is predominant in internet materials, similarities between the models and significant Western influence still resides within them. This is irrespective of how much local cultural content is used to train the models. When prompts were written in their local languages, one of the 3 responded to the prompt by producing an image directly linked to the culture (Llama 3.1 Swallow by Japan), and even though the UAE model did not produce the desired image, the language it used was influenced heavily by Arabic culture. I imagine that the difficulty in getting it to describe the physical characteristics of a person were likely influenced by cultural practices, as UAE rules and policies around portrayal of personal images (e.g. publicly taking and sharing pictures without consent) are extremely strict, as are policies on AI depictions of cultural sites and national symbols.

Conclusion

Within the study, I used facial features and skin tones in output images generated by AI models as an indicator for cultural and ethnic diversity within the AI models' training data, which is simplistic and has its obvious limitations. However, important and insightful conclusions can still be drawn. The results I got from this two part study were of great interest to me. Although there is still a lot of caution around the use of generative AI for legal copyright and personal security reasons, cultural representation within technology remains an area that is lacking. So any improvement in that space, as has been seen with the progression of the AI models in part 1, is a step in the right direction. However, there was still an obvious lack of representation still present. For the part 2 - local model testing, I get two important conclusions. 1. It is clear that the English-language training data is powerful, and should be considered when creating prompts, by intentionally including requests for more localized consideration when it is important for the user. 2. The localised cultural fingerprint of the models is also strong, and goes beyond simple visual diversity, and can be used to level or even overpower the English-language training tendency.

Like any exploratory study, this one had limitations. It could easily have been improved by using a larger number of more powerful models with more variance in release dates for the first part, and a larger number of localised models in the second part, including more than just 3 countries. However, the study and the results satisfied my curiosity, and I will continue to test upcoming models, hoping to see a continued increase in diversity. Ultimately, cultural representation in AI models is not just a question of fairness but of how these systems learn to see humanity. This leads me to my next post, where I will delve into the design practices of OpenAI, Meta, and Google to show how cultural misalignment threatens international education, and what we can do to mitigate the effects.

For full, uncropped images and text descriptions please visit this Google Doc.

Links to similar research articles

Stable bias: evaluating societal representations in diffusion models

Partiality and Misconception: Investigating Cultural Representativeness in Text-to-Image Models

This is how AI image generators see the world

What Does AI Think a Chemist Looks Like? An Analysis of Diversity in Generative AI

Cultural Bias in Text-to-Image Models: A Systematic Review of Bias Identification, Evaluation and Mitigation Strategies

Study finds gender and skin-type bias in commercial artificial-intelligence systems